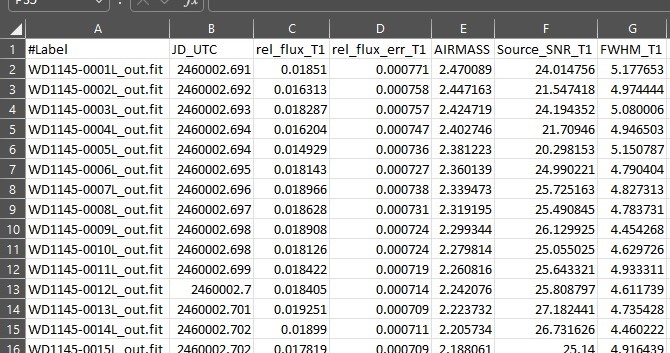

Could you please explain the time scales used in AIJ? For example, attached is part of a file from an AIJ run. As you can see, JD_UTC is given to three decimal places (0.001 day), which means that each row of data is 86.4 seconds after the previous row. However, in the observing run itself, I took an exposure every 30 seconds. So, if I took an exposure at 2460002.691 (as shown in the first row), the next exposure would have taken place at 2460002.69134722, but the next row in the Measurements table shows a JD of 2460002.692. So, the JD_UTC reported in the Measurements table does not correspond precisely to the JD_UTC at which the measurements were actually made. I see the same thing with BJD_TDB.
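For reference, here is the arithmetic behind those numbers as a small Python sketch. It is not tied to AIJ in any way; the helper names `step_seconds` and `decimals_needed` are just made up for illustration, and it assumes a plain 86400-second day.

```python
import math

SECONDS_PER_DAY = 86400.0  # assumes a plain 86400 s day, no leap-second handling


def step_seconds(decimals):
    """Time step, in seconds, of the last printed decimal place of a JD."""
    return SECONDS_PER_DAY / 10**decimals


def decimals_needed(cadence_s):
    """Smallest number of JD decimal places whose step does not exceed the cadence."""
    return math.ceil(math.log10(SECONDS_PER_DAY / cadence_s))


cadence_days = 30.0 / SECONDS_PER_DAY  # my 30 s exposure cadence, in days

print(step_seconds(3))          # step of a 3-decimal JD, in seconds
print(round(cadence_days, 8))   # 30 s expressed in days
print(decimals_needed(30.0))    # decimals needed to resolve a 30 s cadence
```

By this estimate, four decimal places (a step of 8.64 seconds) would be the minimum needed to tell consecutive 30-second exposures apart, and more would be needed to recover the actual exposure times.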

If I want higher precision (equal to my exposure cadence) to be reported in the Measurements Table, is there a way to configure that in AIJ?

Thanks,

Ed